While innovation in Silicon Valley remains ever-changing, one thing stays the same – emerging technologies can only go so far before they need help.

That phenomenon happened with Uber Technologies Inc., which saw mass adoption long before labor laws could scramble to understand and legislate the newly coined “gig work” sector. It happened with psychedelic drugs, when developing a new kind of medication also meant educating therapists and achieving buy-in from the insurance sector.

Now, it’s generative artificial intelligence. Tech giants have waxed poetic about AI’s ability to fundamentally alter industries. A KPMG survey of companies with $1 billion in revenue found that 97% said they were investing in AI over the course of the year. And globally, AI companies have raised nearly $315 billion so far in 2025, a new all-time record for the sector.

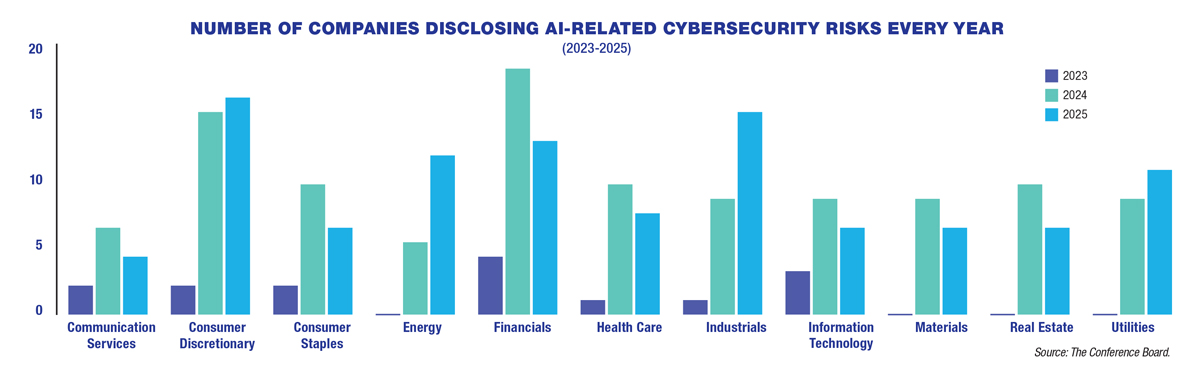

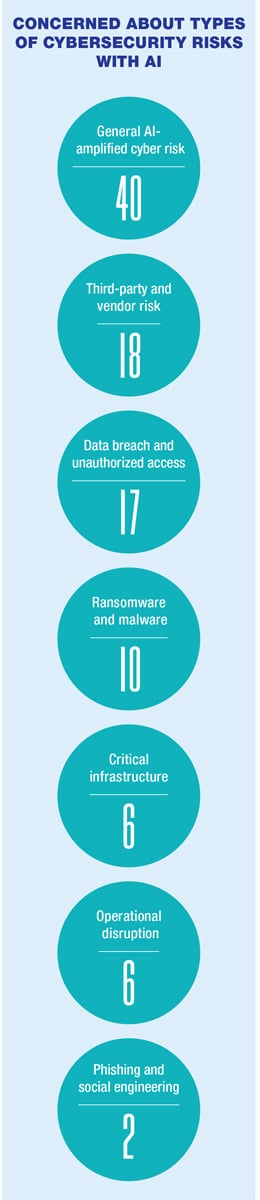

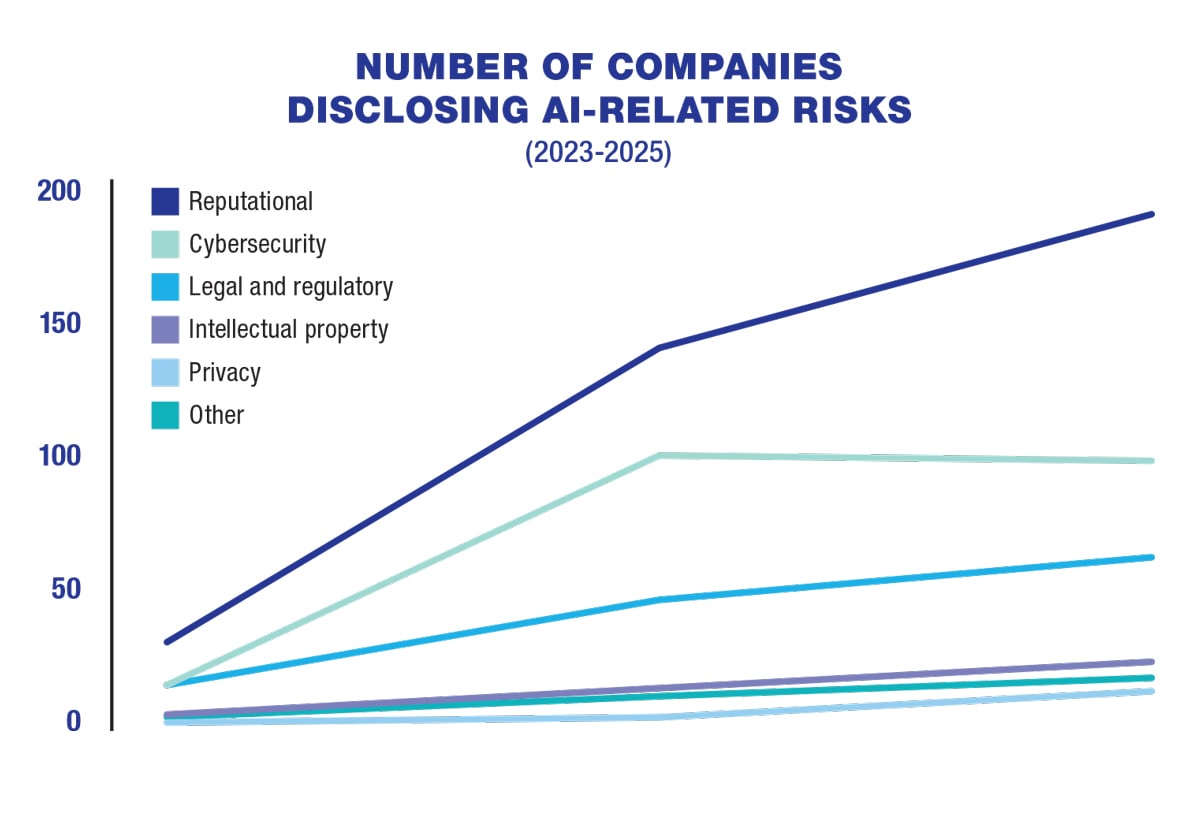

But as AI has seen rapid adoption across industries, it has also become a greater security risk for companies. A report published by The Conference Board in October found that 72% of Standard & Poor’s 500 companies disclosed at least one AI-related risk in their annual filings, up from 12% in 2023. The report also showed that 99 companies disclosed a cybersecurity-related AI risk in 2025, compared to 15 companies in 2023.

“The pace is so quick. In the span of two years, it’s gone from a niche new thing to suddenly every company having mainstream generative AI and other forms of AI,” said Andrew Jones, the principal researcher for the Governance & Sustainability Center at The Conference Board. “With that now comes all the extra potential for making money and business opportunities but also comes with a lot of baggage and risk.”

Flying blind

The risks surrounding AI make it yet another case study in how nascent and emerging technologies can only grow so far before the ancillary support systems catch up.

Though the Conference Board’s report covers a slew of concerns – ranging from the reputational risks of failing to see AI projects through to legal and regulatory headwinds – cybersecurity is a clear throughline. The report found that 31% of the companies concerned with reputational risks cite potential privacy breaches and cybersecurity failures as a threat to the company’s standing with the public. No one wants to be the first company known for having a severe breach due to AI.

“What we’ve seen more of in terms of investor pressure and shareholder pressure is more on (using AI) responsibly,” Jones said. “Do you have the right governance processes in place? How is your board overseeing it? What happens if there’s a huge reputational crisis?”

Companies are still experimenting with different use cases for the technology, while navigating a patchwork of ever-changing regulations – largely implemented to protect users’ information – by the European Union, the U.S. Securities and Exchange Commission and a handful of states. However, President Donald Trump’s administration attempted to strike down actions taken by the states in its “One Big Beautiful Bill” by suspending states’ AI-related laws.

While the likes of the Family Educational Rights and Privacy Act and the Health Insurance Portability and Accountability Act – FERPA and HIPAA, respectively – have long guided education and health care-related companies, no overarching legislation exists for AI.

“Companies are very concerned about how they navigate that,” Jones said. “In the years to come, they’re going to have to worry about Europe. If they’re multinationals, they have to worry about complying with all these different state level laws.”

A new reality

Nick Mancini knows firsthand the process of grappling with emerging technology. He began working in IT when he was just 21 and was hired by a college professor. He has seen the business and enterprise side of IT and technology evolve over the last 42 years.

When Mancini started The Tech Consultants in 2006, his goal was to create a one-stop service that could architect and monitor a network of infrastructure, email and security compliance for businesses. Twenty years ago, the Woodland Hills-based company’s team of engineers and technicians lived on the road traveling to offices all over Southern California.

Today, many of them work from home, accessing the cloud remotely. Even on-site technology has become so reliable that daily and hourly reboots are rendered unnecessary.

“We have shifted our business model to provide support to our end users and to continue implementing best practices,” said Mancini. “But the best practices today are really focused around security, really focused around ensuring that a customer’s environment is as impenetrable as possible.”

Brute-force cybersecurity attacks, by which an entity will attempt to find a weak point in the system and penetrate it, are less common these days. Today, phishing emails that appear to come from a familiar bank or co-worker and contain convincing AI-generated text or images are of greater concern for Mancini’s clients. A quarter (25%) of chief information security officers have reported an AI-powered cybersecurity attack in the last year, according to a July report published by the cybersecurity firm Team8. More than half – at 52% – reported increasing their cybersecurity budget in part due to AI-accelerated attacks on the network.

“It’s so much easier to get somebody on the inside to open the door for you,” Mancini said. “And that’s what has been happening for the last three, four or five years with phishing emails.”

Missed opportunities

AI itself proves to be a risk for companies. The Conference Board reported 45% of companies that disclosed AI cybersecurity risks pointed to vulnerabilities baked into working with third-party players, like cloud providers and software-as-a-service platforms. Companies rely on these providers as part of their enterprise kit, but they cannot safeguard against the vulnerabilities those providers pose.

“They’re buying AI from cloud providers or from vendors and they don’t necessarily have much visibility into what those systems look like and where the data is sitting,” Jones said.

That makes it difficult for AI to penetrate certain highly regulated industries. For instance, the health care industry must comply with HIPAA regulations. The education sector relies on FERPA to protect student data.

Several companies have started to fill in the gaps and create specific AI tools that follow sector compliance regulations. Nectir, a Playa Vista-based edtech platform, allows schools to adopt large language models and use them in a way that is FERPA-compliant.

“What we give them is the infrastructure for them to go in and say, ‘We’re going to start with these assistants, we’re going to give it all of these data sets and it’s not going to use any of those files to train the LLM. It’s going to be completely private to our workspace in our campus,’” said Kavitta Ghai, the co-founder and chief executive officer of Nectir.

The tragic reality of cybersecurity is that it is forever in an arms race with new technology. When it comes to AI, people like Mancini are battling it, well, with more AI. Mancini uses “a ton of AI” to automate certain processes like scanning the dark web for compromised email credentials.

AI is also often used to create programs that attack the system on behalf of the network administrator to look for weak points that need to be addressed.

“They’re doing nothing but pointing it at each other, right?” Mancini said. “With the ability of people to develop their own GPTs and their own models based on easy-to-use tools, you can rather easily create a process or an application or a bot to go out and scour the internet to look for data and use that data for good or for bad.”